Fingerprint analysis is partly subjective, and this can lead to errors. A 2009 study by the National Academy of Sciences (link below) found that the results of an analysis were not always repeatable. An experienced fingerprint examiner might disagree with their own past conclusions when examining the same set of prints at a later date. This kind of uncertainty can lead to innocent people being wrongly accused and guilty people being allowed to remain free.

Scientists from NIST (National Institute of Standards and Technology) and Michigan State University have recently developed an algorithm that automates a key step in fingerprint analysis and could greatly reduce errors caused by human subjectivity.

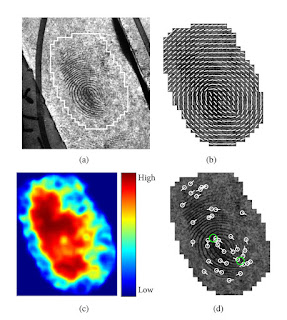

Fingerprints found at a crime scene, called latent prints, are often partial, distorted, or smudged. A print may also be left on a surface with a background pattern that makes it hard to separate the print from the background. When crime scene prints go for analysis, the first step is to judge how much useful information they contain. Prints deemed useful are submitted to the AFIS (Automated Fingerprint Identification System) database to search for matches.

If a fingerprint doesn’t contain sufficient information, a search could produce erroneous matches. This is why the first step of evaluating latent prints is so important, and why researchers set out to create an algorithm that judges prints more consistently, accurately, and efficiently. The time saved can be used to reduce the backlog of prints, solve crimes more quickly, and devote more attention to challenging prints.

The scientists built their algorithm with machine learning, training the computer to recognize patterns using known prints. Each print was given a quality score from 1 to 5. In practice, prints whose quality score is too low to produce an accurate match should be pulled and not sent on to AFIS for matching.
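To make the screening step concrete, here is a minimal Python sketch of how prints might be triaged once each one has a quality score. It is not the NIST and Michigan State algorithm. The `LatentPrint` class, the `triage` function, and the cutoff of 3 are all hypothetical, chosen for illustration only, since the article does not say which score is the minimum for submission.

```python
from dataclasses import dataclass

# Assumed cutoff for illustration; the article does not state one.
MIN_QUALITY = 3


@dataclass
class LatentPrint:
    print_id: str
    quality: int  # quality score from 1 to 5, higher meaning more usable


def triage(prints, min_quality=MIN_QUALITY):
    """Split prints into those to submit for matching and those to hold back."""
    submit, hold = [], []
    for p in prints:
        if not 1 <= p.quality <= 5:
            raise ValueError(f"quality score out of range for {p.print_id}")
        if p.quality >= min_quality:
            submit.append(p)
        else:
            hold.append(p)
    return submit, hold


if __name__ == "__main__":
    sample = [LatentPrint("a", 1), LatentPrint("b", 3), LatentPrint("c", 5)]
    submit, hold = triage(sample)
    print("submit:", [p.print_id for p in submit])
    print("hold:", [p.print_id for p in hold])
```

Only the prints in the submit list would go on to a database search. The held prints could be set aside or given to an examiner for a closer look.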

What enabled the breakthrough, besides advances in machine learning and computer vision, was the availability of a large set of fingerprint data for testing and analysis. The Michigan State Police gave researchers access to a large database of prints after the prints were stripped of identifying information to protect privacy.

Still, a larger data sample is needed to continue refining the algorithm. Elham Tabassi, a NIST computer engineer, states, “We’ve run our algorithm against a database of 250,000 prints, but we need to run it against millions. An algorithm like this has to be extremely reliable, because lives and liberty are at stake.”

Scientists Automate Key Step in Forensic Fingerprint Analysis

Published August 14, 2017 on www.nist.gov

Strengthening Forensic Science in the United States: A Path Forward (2009)

National Research Council of the National Academies
